1. Why PCA?

We often deal with high-dimentional data sets. Using PCA is handy for eliminating dimenstions

2. Application: Background Removal

There is a video, we want to remove the background

2.1. Load the Data

We can scale the image to $60\times 80$, and stack the image to a tall column $1\times 4800$

If the video contains $11300$ images, then the video can be represented as a $11300\times 4800$ matrix

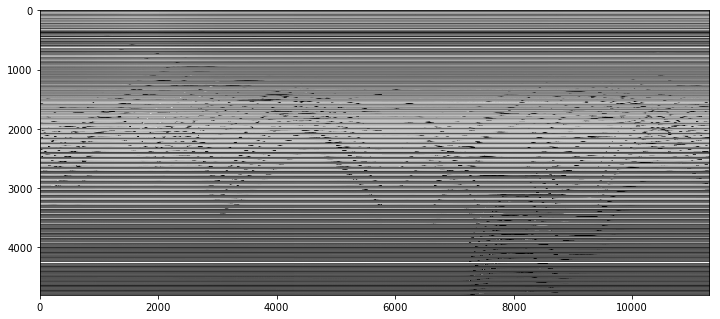

3. First Attempt: SVD

Preserver only 2 feature

1 | from sklearn import decomposition |

Great, we got a good result

4. PCA

Classical PCA (SVD) : seeks the best rank-$k$ estiamte $L$ (Low Rank) of $M$, this is what we do above

Minimizing $||M-L||$

Can only handle small noise

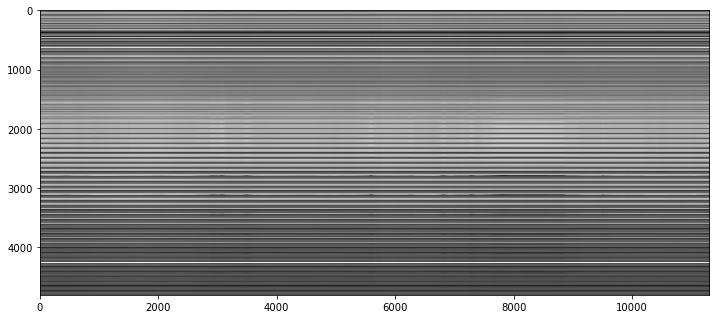

Robust PCA, factors a matrix into the sum of 2 matrices, $M=L+S$, Low rank + Sparse

Low rank: there is a lot of redundant information, in this case, is the background

Sparse: without the background, only people in the image, that means most of the elements in $S$ would be zero

4.1. Robust PCA

Goal:

$$

minimize ||L|{*}+\lambda|S|{1} \

subject\ to \ L+S=M

$$

- $||\space||_1$ is the L1 norm, for a matrix, L1 norm equals to the maximum absolute column norm

- Why L1 norm? we need $S$ to be sparse, and L1 norm would lead to better sparsity

- Why L1 lead to better sparsity? See another blog..

- $||\space||_*$ is the nuclear norm, is the L1 norm of the singular values, minimize the result will lead to low rank