1. Overview

Apple first introduced Face ID as an unlocking method on the iPhone X. It combines several technologies to improve its performance:

- Front-facing depth camera

- Infrared camera

- Deep learning

I was very interested in this technology, especially in how Apple can apply deep learning with so little data and such limited computing resources on a mobile phone.

2. How It Works

Apple released a whitepaper about Face ID, so we can learn something from it.

Face ID is built on what Apple calls the TrueDepth camera system. According to the whitepaper, it:

- Maps the geometry of your face

- Confirms attention by detecting the direction of your gaze

For users, setup is similar to Touch ID:

- Register the user's face

- Look at the phone

- Slowly rotate the head

- Done!

As we can see, the process is pretty simple and quick.

For an ordinary classification procedure, however, this setup poses several problems:

- The raw data is limited

- Computing resources are limited

- There are no negative samples

So I think Apple uses a different method to solve this problem.

3. A Possible Approach

First of all, I think extracting features from the face is the very first thing to do. That way, the device works with a small feature vector rather than a huge image, so a classifier or neural network can be trained or evaluated in a short time.

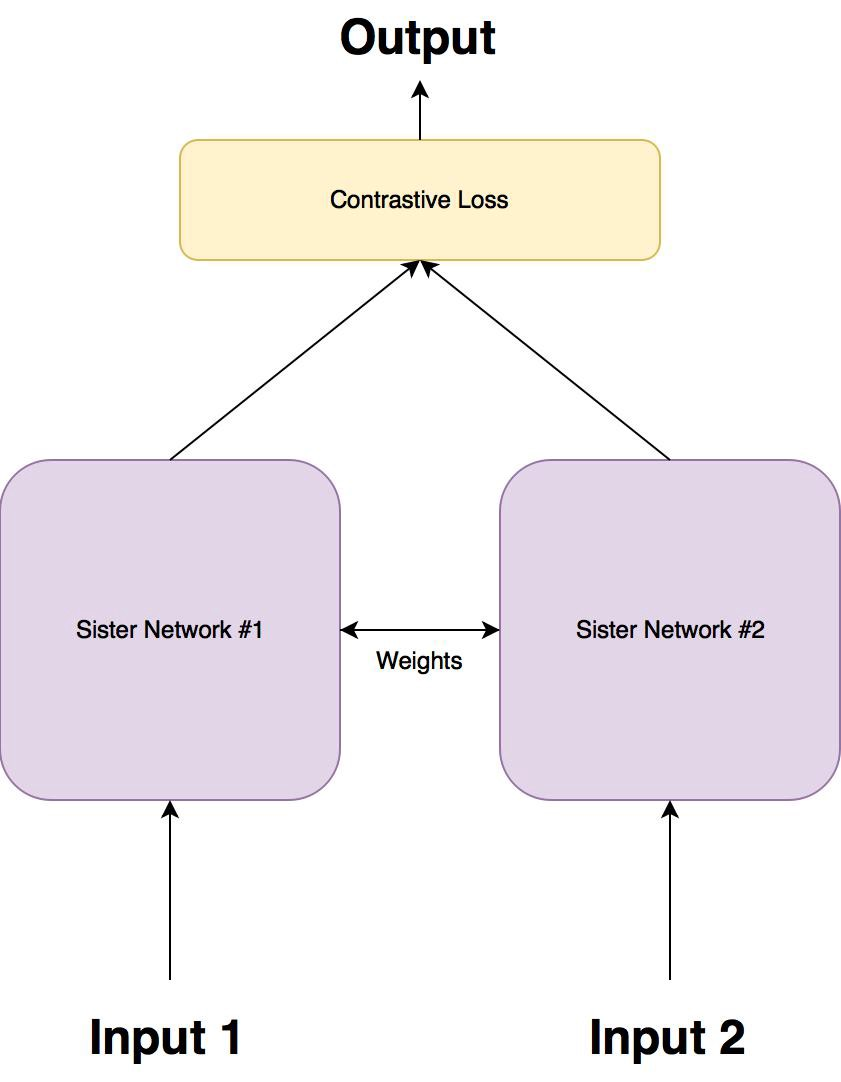

A Siamese-like convolutional neural network might be a good way to do the job.

The two networks share the same weights.

The main purpose of this architecture is to measure the similarity of two inputs.
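
To make the weight sharing concrete, here is a minimal sketch in plain Python. It is not Apple's implementation: the single `tanh` layer, the random weights produced by the hypothetical `make_weights`, and the use of cosine similarity are all assumptions chosen only to illustrate the idea that both inputs go through the same branch.

```python
import math
import random

def make_weights(in_dim, out_dim, seed=0):
    """Hypothetical stand-in for pre-trained weights: one random linear layer."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)]
            for _ in range(out_dim)]

def embed(x, weights):
    """One branch of the network: linear layer + tanh, then L2-normalise."""
    h = [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]
    norm = math.sqrt(sum(v * v for v in h)) or 1.0
    return [v / norm for v in h]

def similarity(x1, x2, weights):
    """Both inputs pass through the SAME weights; the score is cosine similarity."""
    e1 = embed(x1, weights)
    e2 = embed(x2, weights)
    return sum(a * b for a, b in zip(e1, e2))

# Toy 4-dimensional "images"; a real model would take pixel or depth data.
W = make_weights(in_dim=4, out_dim=3)
a = [0.1, 0.5, -0.2, 0.9]
b = [0.9, -0.3, 0.4, 0.0]
print(similarity(a, a, W))  # identical inputs give a score of about 1.0
print(similarity(a, b, W))  # different inputs give some value in [-1, 1]
```

Because there is only one set of weights, "two networks" really means one function applied twice, which is what keeps the model small enough for a phone.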

So Apple could:

- Pre-train a model that can detect different features in users' faces

- Capture the user's face when they register Face ID and use it as input 1

- Capture an image as input 2 whenever the user needs to unlock the device or use Face ID to purchase something

- Calculate the similarity between input 1 and input 2
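
The steps above can be sketched as a small enroll-and-verify routine. This is only an illustration under stated assumptions: `embed` is a hypothetical placeholder for the pre-trained network (here it merely normalises its input), averaging several registration captures into one template is my own guess at how the head-rotation step might be used, and the `0.9` threshold is arbitrary.

```python
import math

def normalise(v):
    """Scale a vector to unit length (a zero vector is left unchanged)."""
    norm = math.sqrt(sum(a * a for a in v)) or 1.0
    return [a / norm for a in v]

def embed(capture):
    """Hypothetical placeholder for the pre-trained feature extractor."""
    return normalise(capture)

def enroll(captures):
    """Average the embeddings of the registration captures into a template (input 1)."""
    embs = [embed(c) for c in captures]
    mean = [sum(col) / len(embs) for col in zip(*embs)]
    return normalise(mean)

def verify(template, capture, threshold=0.9):
    """Compare a new capture (input 2) with the template using cosine similarity."""
    score = sum(a * b for a, b in zip(template, embed(capture)))
    return score >= threshold, score

# Toy 3-dimensional feature vectors standing in for face captures.
template = enroll([[1.0, 0.1, 0.0], [0.9, 0.0, 0.1]])
same, _ = verify(template, [1.0, 0.05, 0.05])   # close to the enrolled face
other, _ = verify(template, [0.0, 1.0, 0.0])    # a very different face
print(same, other)  # True False
```

Nothing is trained on the device in this sketch: registration only stores a template, which is one way to cope with having few samples and no negative samples for the user.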