1. Conclusion

- Robot-Human Interaction & Collaboration: This theme is prevalent throughout the seminars, emphasizing the need for more natural and efficient interactions between robots and humans. This covers areas like haptic feedback, interaction through touch, remote presence systems, and even the ethical considerations of autonomous systems.

- Autonomous Systems & Self-learning: There’s a substantial focus on making robots more autonomous and adaptable through the use of learning algorithms. This includes seminars on autonomous vehicles, field-hardened robotic autonomy, self-supervised networks, and machine learning for robots.

- Manipulation & Grasping: The issue of creating robotic systems that can effectively interact with their physical environment is clearly important. There are numerous seminars focused on designing grippers, in-hand manipulation, and incorporating tactile sensors for better performance in various tasks.

- Robotic Design & Fabrication: A number of seminars are dedicated to the development and design of novel robotic systems. This includes topics such as designing bioinspired aerial robots, flexible surgical robots, shape-changing displays and robots, as well as pressure-operated soft robotic snakes.

- Safety & Robustness: There’s a clear emphasis on making robots more robust and safer, whether it’s through perception-based control, modeling, planning, reachability, or learning in uncertain environments. This theme is especially relevant for autonomous vehicles and robots operating in dynamic or challenging environments.

- Perception & Sensing: Multiple seminars focus on enabling robots to perceive, understand, and make sense of their environments. This includes topics such as spatial perception, tactile sensing, self-supervision for robotic learning, and incorporating semantics into robot perception.

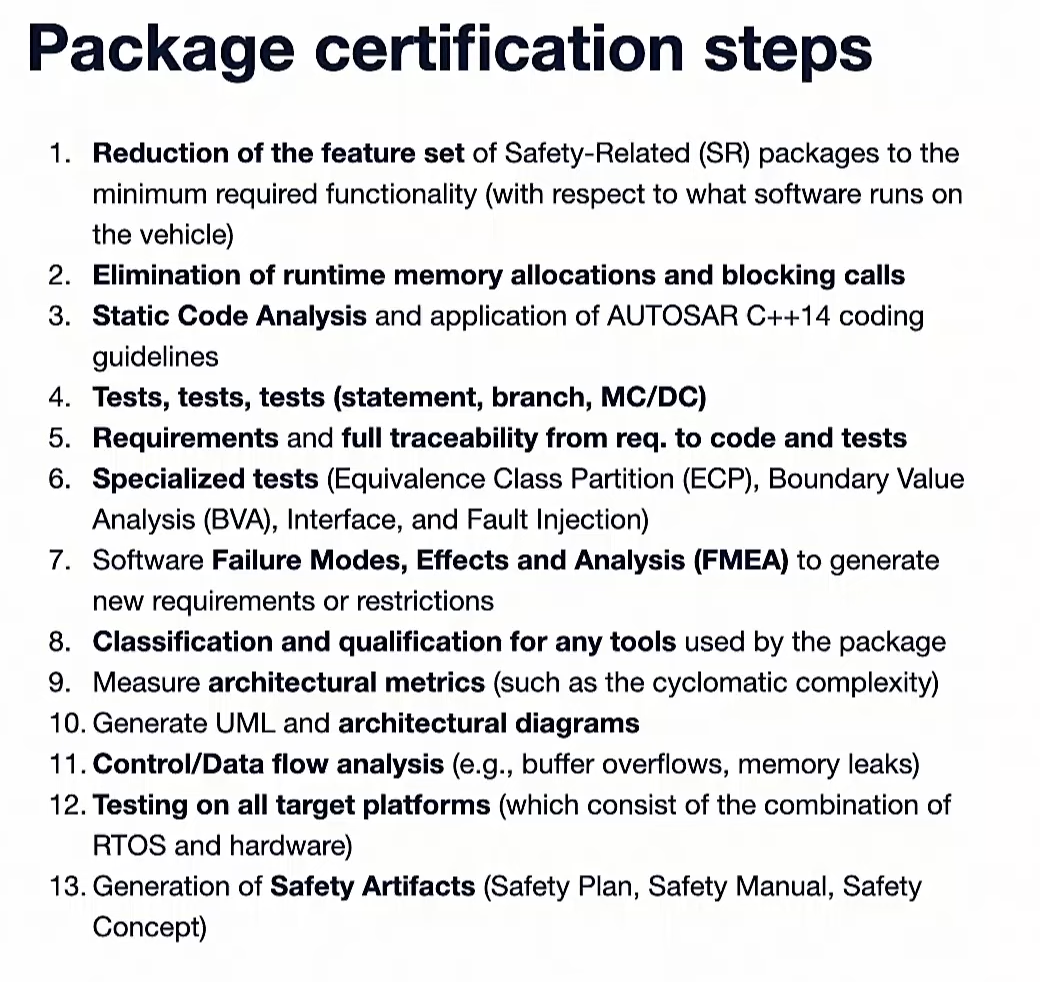

- Software & Simulation: The transition from open-source to safety-certified software and the use of simulations for testing and training robots are also recurring themes. This also includes discussions on distributional representations and scalable simulations for Real-to-Sim-to-Real with deformables.

- Applications: There is a range of seminars focusing on specific applications of robotics, from logistics and surgical assistance to ocean exploration and space.

2. Control-Oriented Learning for Dynamical Systems

The seminar is about “Control-Oriented Learning for Dynamical Systems.” It focuses on the interplay between learning and control, particularly in the context of robotics.

2.1. One or more of the research problems the speaker’s work addresses

- Difficulty in modeling robotic systems operating under different conditions, such as icy roads, wind conditions, and contact situations.

- The limitations of naive regression for closed-loop control tasks that happen over long time horizons.

- The need for a learning approach that is more adaptable and efficient for closed-loop control.

2.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker proposes “control-oriented learning,” which conditions the model to perform better in closed-loop control.

- This approach involves three different lines of work:

  - Translating the concept of stabilizability into algebraic conditions used as a regularizer for closed-loop control performance during offline learning.

  - Structuring the model appropriately offline so that it yields a useful closed-loop controller based on the Linear Quadratic Regulator (LQR).

  - Using meta-learning to learn a good extension to a nominal model for adaptive control.

- These methods work because they focus on the downstream control objective rather than just the model fitting objective. They also leverage the inherent structure of the systems to enable control design.
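The link between a learned model and a closed-loop controller can be made concrete with a small sketch. The example below is not the speaker's method: it only shows how an LQR gain is computed from a model and then used in closed loop. The scalar model `a_hat`, `b_hat` and the cost weights are made-up values standing in for a learned model.

```python
def scalar_dlqr(a, b, q, r, iters=200):
    """LQR gain for x[t+1] = a*x[t] + b*u[t] with cost sum(q*x^2 + r*u^2).

    Iterates the scalar discrete-time Riccati equation to a fixed point.
    """
    p = q
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    return a * b * p / (r + b * b * p)


# Hypothetical learned model: an open-loop unstable scalar system (|a| > 1).
a_hat, b_hat = 1.2, 0.5
k = scalar_dlqr(a_hat, b_hat, q=1.0, r=0.1)

# Under the feedback law u = -k*x the closed loop is x[t+1] = (a - b*k) * x[t].
closed_loop_pole = a_hat - b_hat * k

x = 1.0
for _ in range(50):
    x = closed_loop_pole * x
print(f"gain={k:.3f}, pole={closed_loop_pole:.3f}, x after 50 steps={x:.2e}")
```

If the learned model is stabilizable, the resulting gain drives the state to zero. This is one way to see why conditioning the model on stabilizability, rather than on prediction error alone, matters for the downstream controller.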

2.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Question: Are you testing on quadcopters throughout, and do the results you’re showing here depend on how linear the dynamics of the drone are?

- Answer: The method is pretty agnostic to the system. It was tested on a quadcopter for one project, but it can be applied to other systems as well. The difference in performance between the method and baselines increases with the nonlinearity of the system.

- Question: Is there a certain a priori structure you impose on the class of functions when regressing over models f?

- Answer: In the first project, the system is assumed to be control-affine, i.e., of the form f(x) + B(x)u. Some further assumptions about the system, such as sparsity in B(x), simplify the application of the inequality conditions.

- Question: What would happen if the dynamics that you’re trying to learn are not actually controllable, or if only some subset of the states is controllable and the rest are not?

- Answer: You would have to find some way of separating those states out. In linear systems, you can look at ideas like the Kalman decomposition, which separates the controllable states from the uncontrollable ones.
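For linear systems, a standard first step before any such separation is the rank test on the controllability matrix. The sketch below covers only the two-state, single-input case, where the test reduces to a determinant. The helper names and the two example systems are illustrative assumptions, not taken from the talk.

```python
def controllability_matrix_2x2(A, b):
    """Return [b, A b] for a two-state, single-input system x' = A x + b u."""
    Ab = [A[0][0] * b[0] + A[0][1] * b[1],
          A[1][0] * b[0] + A[1][1] * b[1]]
    return [[b[0], Ab[0]],
            [b[1], Ab[1]]]


def is_controllable_2x2(A, b, tol=1e-9):
    """The system is controllable iff [b, A b] has full rank (nonzero determinant)."""
    C = controllability_matrix_2x2(A, b)
    det = C[0][0] * C[1][1] - C[0][1] * C[1][0]
    return abs(det) > tol


# Double integrator: the input drives velocity, and velocity drives position.
double_integrator = is_controllable_2x2([[0.0, 1.0], [0.0, 0.0]], [0.0, 1.0])

# Decoupled system in which the input never reaches the second state.
decoupled = is_controllable_2x2([[1.0, 0.0], [0.0, 2.0]], [1.0, 0.0])

print(double_integrator, decoupled)  # True False
```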

2.4. Takeaway, the most important takeaway

- Control-oriented learning can significantly improve the performance of robotic systems operating under different conditions.

- This approach is more adaptable and efficient than traditional methods, focusing on the downstream control objective rather than just the model fitting objective.

- The speaker’s work demonstrates that this method outperforms naive and certificate-based methods in terms of sample efficiency and performance, and can even beat the oracle when enough data is available.

3. Aligning Human and Robot Representations

The seminar is about “Aligning Human and Robot Representations.” It focuses on the challenge of aligning the understanding and interpretation of tasks between humans and robots, particularly in the context of human-robot interaction.

3.1. One or more of the research problems the speaker’s work addresses

- Difficulty in aligning human and robot representations, leading to misinterpretation of tasks and suboptimal performance.

- The limitations of traditional learning methods that require handcrafted representations or end-to-end deep learning methods that generalize poorly.

- The need for a learning approach that can efficiently learn from human supervision and adapt to different human capabilities and preferences.

3.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker proposes a “divide and conquer” approach to robot learning, focusing first on learning the representation from human input before learning the downstream task.

- The approach involves learning features one at a time from human supervision, using a method called “feature traces” where the human provides a sequence of states with monotonically decreasing feature values.

- The robot can then use these feature traces to learn a feature function, and this method has been shown to generalize well to more complex settings, including perceptual features and motion features.

- The speaker also introduces a method for the robot to autonomously detect when its representation is misaligned with the human’s, using a model that estimates the robot’s confidence in explaining the human’s input.

- These methods work because they leverage human input in a structured and efficient way, allowing the robot to learn and adapt to human preferences and capabilities.
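One way to picture learning from a feature trace is as a ranking problem: every earlier state in the trace should score higher than every later one. The sketch below fits a linear feature with a pairwise logistic loss. It is a simplified stand-in, not the speaker's implementation (the talk's features may well be neural networks), and the 2-D states and function names are hypothetical.

```python
import math


def learn_feature_from_trace(trace, lr=0.5, epochs=200):
    """Fit a linear feature phi(s) = w . s from one feature trace.

    The trace is ordered so that the true feature value decreases along it,
    so every earlier state should score higher than every later state.
    """
    w = [0.0] * len(trace[0])
    pairs = [(i, j) for i in range(len(trace)) for j in range(i + 1, len(trace))]
    for _ in range(epochs):
        for i, j in pairs:
            diff = [a - b for a, b in zip(trace[i], trace[j])]
            margin = sum(wk * dk for wk, dk in zip(w, diff))
            # Gradient of the logistic loss log(1 + exp(-margin)) w.r.t. w
            # is scale * diff with this scale.
            scale = -1.0 / (1.0 + math.exp(margin))
            w = [wk - lr * scale * dk for wk, dk in zip(w, diff)]
    return w


def phi(w, state):
    return sum(wk * sk for wk, sk in zip(w, state))


# Hypothetical trace: the first coordinate (say, distance to a table) shrinks
# along the trace; the second coordinate is an unrelated distractor.
trace = [(1.0, 0.3), (0.8, 0.9), (0.5, 0.1), (0.2, 0.6), (0.0, 0.4)]
w = learn_feature_from_trace(trace)
values = [phi(w, s) for s in trace]
print([round(v, 2) for v in values])
```

The learned weights put most of their mass on the first coordinate, so the feature value falls along the trace even though the human never labeled any state with a number.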

3.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Question: Are you learning these features one by one?

- Answer: Yes, the features are learned one at a time from human supervision using a method called “feature traces.” This allows the robot to focus on learning each feature individually, which can lead to more accurate and generalizable learning.

- Question: How easy is it to combine these features? Are you assuming that they are starting from the same value?

- Answer: The features are normalized to be between zero and one, which makes the reward learning easier. However, this is not mandatory and the features do not have to start from the same value. The important thing is that the features need to be positive.

3.4. Takeaway, the most important takeaway

- The “divide and conquer” approach to robot learning can significantly improve the alignment of human and robot representations, leading to better performance and adaptability in human-robot interaction.

- This approach allows robots to learn from human supervision in a structured and efficient way, and to autonomously detect when their representation is misaligned with the human’s.

- The speaker’s work demonstrates the potential of this method in various applications, including personal robotics, semi-autonomous driving, and shared autonomy, and highlights the importance of aligning representations in successful human-robot interaction.

4. Connecting Robotics and Foundation Models

The seminar is about the integration of machine learning and robotics, specifically focusing on the use of deep learning for robotic manipulation tasks.

4.1. One or more of the research problems the speaker’s work addresses

- The speaker addresses the challenge of applying machine learning to real-world robotic tasks. This includes issues such as the variability of real-world environments and the difficulty of collecting sufficient training data.

- The speaker also discusses the problem of transferring learned skills from one task or environment to another, which is a major challenge in robotics.

4.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker proposes a novel approach that combines deep learning with reinforcement learning. This approach allows the robot to learn from its own experiences, rather than relying solely on pre-programmed instructions.

- The speaker’s solution works because it allows the robot to adapt to new situations and tasks. It also reduces the amount of training data required, as the robot can learn from its own trial-and-error experiences.

4.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Question: How does the robot learn from its own experiences?

- Answer: The robot uses a combination of deep learning and reinforcement learning. It learns to associate its actions with the resulting changes in its environment, and it adjusts its behavior based on the outcomes of its actions.

- Question: How does the robot handle new tasks or environments?

- Answer: The robot uses a technique called transfer learning. This allows it to apply the skills it has learned in one context to new contexts.

4.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the potential of combining machine learning and robotics. This approach allows robots to learn from their own experiences and adapt to new tasks and environments, which is a major step forward in the field of robotics.

5. Robots in Dynamic Tasks: Learning, Risk, and Safety

The seminar is about the development and application of robotic manipulation in real-world scenarios.

5.1. One or more of the research problems the speaker’s work addresses

- Complexity of Real-world Manipulation: The speaker addresses the challenge of designing robots that can manipulate objects in the real world, which is a complex and unpredictable environment.

- Lack of Generalization: Current robotic systems struggle to generalize their learning to new tasks or environments.

- Safety and Efficiency: Balancing the need for robots to perform tasks efficiently while ensuring safety, especially when interacting with humans.

5.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- Deep Reinforcement Learning: The speaker’s work involves the use of deep reinforcement learning to train robots. This approach allows robots to learn from their mistakes and improve their performance over time.

- Sim-to-Real Transfer: The speaker also discusses the use of simulation environments to train robots before deploying them in the real world. This approach reduces the risk of damage to the robot or its environment during the learning process.

- Multi-task Learning: The speaker’s solution involves training robots on multiple tasks simultaneously. This approach increases the robot’s ability to generalize its learning to new tasks.

5.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Q: How does deep reinforcement learning work in robotic manipulation?

- A: Deep reinforcement learning involves training a robot to make decisions based on its current state and the reward associated with different actions. The robot learns to improve its performance by maximizing the reward it receives over time.

- Q: What is sim-to-real transfer?

- A: Sim-to-real transfer involves training a robot in a simulated environment before deploying it in the real world. This approach allows the robot to learn complex tasks without the risk of causing damage.

- Q: How does multi-task learning improve a robot’s performance?

- A: Multi-task learning involves training a robot on multiple tasks simultaneously. This approach allows the robot to generalize its learning to new tasks, improving its overall performance.
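The reward-maximization loop described in the first answer can be sketched in its simplest form. The example below uses tabular Q-learning rather than deep reinforcement learning: a lookup table replaces the neural network, but the update rule toward "reward plus discounted future value" is the same idea. The corridor environment and all hyperparameters are made up for illustration.

```python
import random


def train_q_table(n_states=5, episodes=300, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a 1-D corridor; reward 1.0 for reaching the last cell."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # actions: 0 = left, 1 = right
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # step limit per episode
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                # Exploit; ties are broken at random so early episodes still move.
                best = max(q[state])
                action = rng.choice([a for a in (0, 1) if q[state][a] == best])
            next_state = max(0, state - 1) if action == 0 else state + 1
            done = next_state == n_states - 1
            reward = 1.0 if done else 0.0
            # Move the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train_q_table()
# Greedy action in every non-terminal cell (1 means "move right").
greedy_policy = [row.index(max(row)) for row in q_table[:-1]]
print(greedy_policy)
```

After training, the greedy policy heads toward the rewarding cell from every position, which is the "learning from mistakes" behavior the answers describe, at toy scale.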

5.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the potential of deep reinforcement learning, sim-to-real transfer, and multi-task learning in advancing the field of robotic manipulation. These approaches allow robots to learn complex tasks, generalize their learning to new tasks, and perform safely and efficiently in real-world environments.

6. Open-Source to Safety-Certified

How to speed up?

Zero-copy memory
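The notes record only "zero-copy memory" as the speed-up and do not say which mechanism the speaker meant. As a generic illustration of the idea, the sketch below contrasts a copying slice with a non-copying view in Python. The buffer size and variable names are arbitrary.

```python
# A bytearray stands in for a large message (for example, a camera frame).
frame = bytearray(1_000_000)

# Slicing the bytearray allocates and fills a new buffer: a copy.
copied_region = frame[1000:2000]

# Slicing a memoryview only creates a new view onto the same memory: no copy.
view = memoryview(frame)
shared_region = view[1000:2000]

# A write to the original buffer is visible through the view but not the copy.
frame[1000] = 255
print(shared_region[0], copied_region[0])  # 255 0
```

Passing views (or handles to shared memory) between components avoids paying for a full copy of each message, which is where the speed-up comes from.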

7. Adaptable Robotic Manipulation Using Tactile Sensors

The seminar is about adaptable robotic manipulation using tactile sensors. The speaker discusses the challenges of integrating robots into our daily lives, particularly in contact-rich environments such as kitchens, healthcare, and e-commerce pipelines. The speaker presents their research on developing tactile sensors for robots to handle contact events better, focusing on two specific projects: in-hand manipulation using multimodal slip sensing and haptic exploration and grip monitoring of a suction cup gripper.

7.1. One or more of the research problems the speaker’s work addresses

- Robots struggle in contact-rich environments due to their inability to handle contact events well.

- The unpredictable nature of contact, including unknown friction coefficients and inertia of objects, makes adaptive control challenging.

- Tactile sensors, while developed and commercially available, are not often seen in robots due to difficulties in generalization across different robot hardware, tasks, and contact physics.

7.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker developed a compact multimodal sensor using a single transducer, called the “nib array sensor”, capable of monitoring linear and rotational slip in a more generalizable manner. This sensor can detect normal pressure, shear force, and vibrations, which are useful for estimating slip direction.

- The speaker also developed a “smart suction cup” that can directly measure local contacts without interfering with suction seal formation. This is achieved by redesigning a single-bellows suction cup to have four internal chambers connected to pressure sensors. The sensors measure the leakage flow through each chamber, providing valuable information about the contact state.

- The speaker’s solutions work because they directly address the challenges of contact-rich environments. The nib array sensor can adapt to changing contact conditions, while the smart suction cup can find the best contact location and monitor the grip during manipulation.
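To make the four-chamber idea more tangible, here is a rough sketch of how per-chamber readings could be turned into a leak direction. Everything specific in it is an assumption for illustration: the chamber placement at 0, 90, 180 and 270 degrees, the use of vacuum level in kPa as the reading, and the weighted-vector estimate itself. The notes do not describe the speaker's actual signal processing.

```python
import math

# Hypothetical layout: four chambers centered at 0, 90, 180 and 270 degrees
# around the cup axis. The real sensor geometry is not given in the notes.
CHAMBER_ANGLES_DEG = (0.0, 90.0, 180.0, 270.0)


def estimate_leak_direction(vacuum_kpa):
    """Estimate where the seal is leaking from four chamber vacuum readings.

    A chamber near a leak holds less vacuum than the others, so each chamber's
    shortfall relative to the strongest reading is used as a weight on its
    direction. Returns (angle_deg, magnitude); the angle is None when all
    chambers read the same.
    """
    strongest = max(vacuum_kpa)
    x = y = 0.0
    for angle_deg, vacuum in zip(CHAMBER_ANGLES_DEG, vacuum_kpa):
        deficit = strongest - vacuum
        x += deficit * math.cos(math.radians(angle_deg))
        y += deficit * math.sin(math.radians(angle_deg))
    magnitude = math.hypot(x, y)
    if magnitude < 1e-9:
        return None, 0.0
    return math.degrees(math.atan2(y, x)) % 360.0, magnitude


# Made-up readings: the chamber at 90 degrees holds much less vacuum.
angle, strength = estimate_leak_direction([60.0, 20.0, 58.0, 60.0])
sealed_angle, sealed_strength = estimate_leak_direction([60.0, 60.0, 60.0, 60.0])
print(angle, strength, sealed_angle)
```

A controller could use such an estimate to tilt or shift the cup toward the leaking side while searching for a good contact location, and to watch for a growing leak during manipulation.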

7.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Question: How does the nib array sensor differentiate between linear and rotational slip?

- Answer: The sensor measures the capacitance between electrodes and a grounded conductive fabric. When the nib vibrates due to slip, it causes a directional capacitance change. By observing the direction of this change, the sensor can estimate the direction of the slip.

- Question: How does the smart suction cup work?

- Answer: The smart suction cup has four internal chambers, each connected to a pressure sensor. These sensors measure the leakage flow through each chamber, providing information about the contact state. This design allows the cup to measure local contacts without interfering with the suction seal formation.

- Question: What are the main challenges in expanding the use of tactile sensors in robots?

- Answer: The main challenges include the wiring and processing of large tactile arrays. Current approaches often involve a central processor querying the tactile sensing information, which may not be efficient for large arrays. A shift towards a system more like the human nervous system, where sensors inform the central processor when they have a stimulus, may be more efficient.
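The contrast between central polling and sensor-initiated reporting in the last answer can be sketched with a toy tactile array. The array size, the threshold, and the function names below are hypothetical. The point is only the difference in how many readings reach the central processor.

```python
def polled_reads(frames):
    """Central polling: the processor reads every taxel on every frame."""
    return sum(len(frame) for frame in frames)


def event_driven_reads(frames, threshold=0.05):
    """Event-driven: a taxel reports only when its value changes by more
    than the threshold since its last report."""
    last_reported = list(frames[0])
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for index, value in enumerate(frame):
            if abs(value - last_reported[index]) > threshold:
                events.append((t, index, value))
                last_reported[index] = value
    return events


# Hypothetical 8-taxel array over 4 frames; only taxel 3 is touched.
frames = [[0.0] * 8 for _ in range(4)]
frames[2][3] = 0.6
frames[3][3] = 0.62

events = event_driven_reads(frames)
print(polled_reads(frames), len(events), events)  # 32 1 [(2, 3, 0.6)]
```

With most of the array idle, the event-driven scheme sends a single message where polling performs 32 reads, and the gap grows with the size of the array.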

7.4. Takeaway, the most important takeaway

The most important takeaway from the seminar is the potential of tactile sensors in improving the adaptability and functionality of robots in contact-rich environments. The speaker’s research on the nib array sensor and the smart suction cup demonstrates innovative approaches to overcoming the challenges of contact events in robotics. These developments could pave the way for more widespread integration of robots into our daily lives.

8. Multi-Sensory Neural Objects: Modeling, Inference, and Applications in Robotics

The seminar is about “Multi-Sensory Neural Objects: Modeling, Inference, and Applications in Robotics”. The speaker discusses the development and application of multi-sensory neural objects in the field of robotics, focusing on the challenges and solutions associated with modeling, inference, and practical applications.

8.1. One or more of the research problems the speaker’s work addresses

- The difficulty in integrating multiple sensory inputs into a single, coherent model that can be used effectively in robotics.

- The challenge of creating a model that can infer and predict the behavior of objects in the environment based on sensory inputs.

- The need for more efficient and accurate methods of applying these models in real-world robotics applications.

8.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker proposes the use of neural networks to create a multi-sensory model. This model can integrate inputs from various sensors and generate a unified representation of the environment.

- The model uses machine learning techniques to infer and predict the behavior of objects, improving the robot’s ability to interact with its environment.

- The speaker’s solution works because it leverages the power of neural networks and machine learning, which are capable of handling complex data and making accurate predictions.

8.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Question: How does the multi-sensory model integrate different types of sensory data?

- Answer: The model uses neural networks to process and combine the data from different sensors, creating a unified representation of the environment.

- Question: How does the model predict the behavior of objects?

- Answer: The model uses machine learning techniques to analyze the sensory data and make predictions about how objects in the environment will behave.

- Question: How can this model be applied in real-world robotics applications?

- Answer: The model can be used to improve a robot’s ability to interact with its environment, for example, by helping it to navigate or manipulate objects more effectively.

8.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the potential of multi-sensory neural objects in robotics. By integrating multiple sensory inputs into a single model and using machine learning to infer and predict object behavior, robots can interact with their environment more effectively and accurately. This approach represents a significant advancement in the field of robotics.

9. Why would we want a multi-agent system unstable

The seminar is about the concept and implications of creating unstable multi-agent systems in the field of robotics and AI. The speaker discusses the potential benefits and challenges of such systems.

9.1. One or more of the research problems the speaker’s work addresses

- The speaker addresses the problem of stability and predictability in multi-agent systems. Traditional systems aim for stability, but this can limit their adaptability and responsiveness.

- The speaker also discusses the challenge of designing systems that can handle unexpected situations or changes in the environment.

9.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker proposes intentionally designing multi-agent systems to be unstable. This instability allows the system to adapt and respond to changes more quickly.

- This approach works because it allows each agent in the system to operate independently and make decisions based on its own observations and experiences, rather than relying on a central control system.

9.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Question: Why would we want a multi-agent system to be unstable?

- Answer: An unstable system can adapt and respond to changes more quickly than a stable one. It allows each agent to operate independently and make decisions based on its own observations and experiences.

- Question: Isn’t instability a bad thing in a system?

- Answer: Not necessarily. While stability is often desirable, it can also limit a system’s adaptability and responsiveness. In some cases, a degree of instability can be beneficial.

9.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the idea that instability in a multi-agent system can be a strength rather than a weakness. This approach can potentially lead to more adaptable and responsive systems, although it also presents new challenges in terms of system design and control.

10. Computational Design of Compliant, Dynamical Robots

The seminar focuses on the computational design of compliant, dynamical robots. The speaker, Cynthia Sung, discusses her research and work in the field of robotics, particularly in the design and development of robots that are compliant and dynamic.

10.1. One or more of the research problems the speaker’s work addresses

- The primary research problem addressed in the seminar is the challenge of designing robots that are both compliant (flexible in their interactions with the environment) and dynamic (capable of complex, high-speed movements).

- The speaker also discusses the difficulties in creating robots that can adapt to a variety of tasks and environments, as well as the challenges in making these robots accessible and usable for non-experts.

10.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker’s approach involves using computational methods to design and optimize robots. This includes the use of algorithms and software tools that can automatically generate robot designs based on specified tasks and constraints.

- The speaker also emphasizes the importance of designing robots that are compliant and dynamic, as this allows them to be more versatile and adaptable in their tasks.

- This approach works because it leverages the power of computation to automate and optimize the design process, making it easier to create complex, high-performance robots.

10.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Q: How can we design robots that are both compliant and dynamic?

- A: This can be achieved through computational design methods, which can automatically generate and optimize robot designs based on specified tasks and constraints.

- Q: How can we make robots more accessible and usable for non-experts?

- A: One approach is to develop intuitive software tools that allow non-experts to easily design and customize their own robots.

10.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the potential of computational design methods in the field of robotics. By using these methods, we can automate and optimize the design process, making it easier to create robots that are compliant, dynamic, and adaptable to a variety of tasks and environments. This not only advances the field of robotics, but also makes robots more accessible and usable for non-experts.

11. Deploying Autonomous Service Mobile Robots, And Keeping Them Autonomous

The seminar focuses on the deployment of autonomous service mobile robots and the challenges and solutions to keep them autonomous in real-world environments.

11.1. One or more of the research problems the speaker’s work addresses

- The speaker addresses the problem of maintaining the autonomy of service mobile robots in dynamic and unpredictable environments.

- The challenge of designing robots that can handle a wide variety of tasks and adapt to changes in their operating environment is also discussed.

11.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speaker proposes a solution that involves the use of machine learning and AI to improve the adaptability and decision-making capabilities of the robots.

- This approach works because it allows the robots to learn from their experiences and adjust their behavior accordingly, thereby improving their performance over time.

11.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Q: How can we ensure that the robots can operate safely in dynamic environments?

- A: This can be achieved by incorporating advanced sensors and AI algorithms that allow the robots to perceive their environment and make decisions in real-time.

- Q: How can the robots adapt to changes in their operating environment?

- A: The robots can be designed to learn from their experiences and adjust their behavior accordingly. This can be achieved through machine learning and AI.

11.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the importance of adaptability in autonomous service mobile robots. By incorporating machine learning and AI, these robots can learn from their experiences and adapt to changes in their environment, thereby improving their performance and autonomy.

12. Robot Manipulation in the Logistics Industry

The seminar is about the application of robot manipulation in the logistics industry. The speakers, Samir Menon, Robert Sun, and Kunal Shah, discuss their research and development in this field.

12.1. One or more of the research problems the speaker’s work addresses

- The speakers address the problem of efficient and accurate robot manipulation in the logistics industry. They focus on the challenges of handling a wide variety of objects, dealing with uncertainties in the environment, and ensuring the safety and efficiency of robotic systems.

12.2. What is the novel approach or idea behind the speaker’s solution, Why it works

- The speakers propose a novel approach that combines machine learning techniques with traditional control methods. This approach allows robots to learn from their experiences and improve their performance over time.

- The solution works because it leverages the strengths of both machine learning and control theory. Machine learning allows the robots to adapt to new situations and improve their performance, while control theory ensures stability and safety.

12.3. Questions that may be asked by an inexperienced robotics engineer and Answers

- Q: How does the system handle a wide variety of objects?

- A: The system uses machine learning to learn from its experiences and adapt to new situations. It can handle a wide variety of objects by learning from its past interactions with similar objects.

- Q: How does the system deal with uncertainties in the environment?

- A: The system uses a combination of machine learning and control theory to deal with uncertainties. Machine learning allows the system to adapt to new situations, while control theory provides stability and safety.

- Q: How does the system ensure the safety and efficiency of the robotic systems?

- A: The system uses control theory to ensure stability and safety. It also uses machine learning to improve its performance over time, which increases efficiency.

12.4. Takeaway, the most important takeaway

- The most important takeaway from the seminar is the potential of combining machine learning with traditional control methods in robotic manipulation. This approach allows robots to adapt to new situations, handle a wide variety of objects, and improve their performance over time, which is crucial for the logistics industry.

13. List

Why would we want a multi-agent system unstable

Designing Robotic Grippers for Interaction with Real-World Environments

Incorporating Sample Efficient Monitoring into Learned Autonomy

From open-source to safety-certified robotic software

The Age of Human-Robot Collaboration: OceanOneK Deep-Sea Exploration

Towards Shape Changing Displays and Shape Changing Robots

Robotics algorithms that take people into account

DeXtreme: Transferring Agile In-Hand Manipulation from Simulations to Reality

Failure is Not an Option: Our Techniques at the DARPA Subterranean Challenge, Lessons Learned, and Next Steps

Robots in Dynamic Tasks: Learning, Risk, and Safety

Autonomous NASA robots breaking records on Mars

Perceiving, Understanding, and Interacting through Touch

Flexible Surgical Robots: Design, Sensing, and Control

Multi-Sensory Neural Objects: Modeling, Inference, and Applications in Robotics

Towards Generalizable Autonomy: Duality of Discovery & Bias

Adaptable Robotic Manipulation Using Tactile Sensors

Get in touch: Tactile perception for human-robot systems

Democratizing Robot Learning

Understanding the Utility of Haptic Feedback in Telerobotic Devices

Humanizing Robot Design

Representation Learning for Autonomous Robots

Robot Manipulation in the Logistics Industry

Deploying Autonomous Service Mobile Robots, And Keeping Them Autonomous

Distributional Representations and Scalable Simulations for Real-to-Sim-to-Real with Deformables

Leveraging Human Input to Enable Robust AI Systems

Computational Design of Compliant, Dynamical Robots

Using Data for Increased Realism with Haptic Modeling and Devices

Modeling and interacting with other agents

Towards Complex Language in Partially Observed Environments

Interactive Imitation Learning: Planning Alongside Humans

Toward Scalable Autonomy

Deconfliction, Or How to Keep Fleets of Fast-Flying Robots from Crashing into Each Other

Opening the Doors of (Robot) Perception: Towards Certifiable Spatial Perception Algorithms and Systems

Objects, Skills, and the Quest for Compositional Robot Autonomy

Considerations for Human-Robot Collaboration

Towards Robust Human-Robot Interaction: A Quality Diversity Approach

Distributed Perception and Learning Between Robots and the Cloud

Robotic Autonomy and Perception in Challenging Environments

Motion planning of bipedal robots based on Divergent Component of Motion

Challenges to Developing Low Cost Robotic Systems

Designing More Effective Remote Presence Systems for Human Connection and Exploration

Safety-Critical Control of Dynamic Robots

Safe and Robust Perception-Based Control

Toward robust manipulation in complex environments

Designing bioinspired aerial robots with feathered morphing wings

Mind the Gap: Bridging model-based and data-driven reasoning for safe human-centered robotics

Modeling and Control for Robotic Assistants

Self-Supervised Pseudo-Lidar Networks

Field-hardened Robotic Autonomy

Learning and Predictions in Autonomous Systems

Scaled Learning for Autonomous Vehicles

Model Predictive Control of Hybrid Dynamical Systems

Bay Area Robotics Symposium

Hands in the Real World: Grasping Outside the Lab

The Next Generation of Robot Learning

Practical Challenges of Urban Autonomous Driving

Formalizing Teamwork in Human-Robot Interaction

Avian Inspired Design

How to Make, Sense, and Make Sense of Contact in Robotic Manipulation

Crossing the Reality Gap: Coordinating Multirobot Systems in The Physical World

Safety Guarantees with Perception and Learning in the Loop

Autonomous, Agile, Vision-controlled Drones: from Frame-based to Event-based Vision

The Merits of Models in Continuous Reinforcement Learning

No Title

Paradigm shift of haptic human-machine interaction: Historical perspective and our practice

Reachability in Robotics

Sub-sampling approaches to mapping and imaging

Fabrication via Mobile Robotics and Digital Manufacturing

Lagrangian control at large and local scales in mixed autonomy traffic flow: optimization and deep-RL approaches

High-Performance Aerial Robotics

Fast computation of robust multi-contact behaviors

Taking robots to the cloud and other insights on the path to market

Conversation with a Robot

No Title

Learning Dexterity

L1 Adaptive Control and Its Transition to Practice

Learning Model-free Representations for Fast, Adaptive Robot Control and Motion Planning

Attack-Resilient and Verifiable Autonomous Systems: A Satisfiability Modulo Convex Programming Approach

The Devil Made Me Do it: Computational Ethics for Robots

Making Sense of the Physical World with High-resolution Tactile Sensing

Control-Theoretic Regularization for Nonlinear Dynamical Systems Learning

Assistance of Walking and Running Using Wearable Robots

Learning Representations for Planning

A Grand Challenge for E-Commerce: Optimizing Rate, Reliability, and Range for Robot Bin Picking and Related Projects

Human-Robot Teaming: From Space Robotics to Self-Driving Cars

Recent advances in surgical robotics: some examples through the LIRMM research activities illustrated in minimally invasive surgery and interventional radiology

Hopping Rovers for Exploration of Asteroids and Comets: Design, Control, and Autonomy

Safety and Efficiency in Autonomous Vehicles through Planning with Uncertainty

Planning for Contact

Law as Action: Profit-Driven Bias in Autonomous Vehicles

The Combinatorics of Multi-contact Feedback Control

Autonomous Scheduling of Agile Spacecraft Constellations for Rapid Response Imaging

Safety in Autonomy via Reachability

Design, Theoretical Modeling, Motion Planning, and Control of a Pressure-operated Modular Soft Robotic Snake

The Information Knot Tying Sensing and Control and the Emergence Theory of Deep Representation Learning

Biological and Robotic haptics

Learning Perception and Control for Agile Off-Road Autonomous Driving

Towards Generalizable Imitation in Robotics

Designing exoskeletons and prostheses that enhance human performance

Value sensitive design for autonomous vehicle motion planning

On Quantifying Uncertainty for Robot Planning and Decision Making

Self-Supervision for Robotic Learning

Numerical methods for modeling, simulation and control for deformable robots

Semantic Understanding for Robot Perception

Persistent Coverage Control for Constrained Multi-UAV Systems

Recent Advances Enabling Autonomous Transportation

Safety-Critical Control for Dynamic Legged and Aerial Robotics

No Title

Combining learned and analytical models for predicting the effect of contact interaction

Evolvability and adaptation in robotic systems

Continuously Learning Robots

Probabilistic Numerics — Uncertainty in Computation