1. Goal

Highlight some of the progress in 2022 and the vision for 2023

2. Language, Vision, and Generative Models

2.1. Language

Language models: although the training objective is simply to predict the next word, the results are quite amazing
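A minimal sketch of the "predict the next word" objective, using a bigram count table as a stand-in for a neural language model. The function names and the toy corpus are illustrative assumptions, not from the source.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (assumption): "the" is followed by "cat" twice and "mat" once.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

A real language model replaces the count table with a learned network over a much longer context, but the prediction target is the same.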

Chain-of-thought prompting helps the language model follow a logical chain of reasoning
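A rough sketch of what a chain-of-thought prompt can look like: the few-shot example shows its intermediate reasoning before the answer, so the model is nudged to do the same. The helper `build_cot_prompt` and the example questions are hypothetical.

```python
def build_cot_prompt(examples, question):
    """Assemble a few-shot prompt whose examples include worked reasoning."""
    parts = []
    for ex in examples:
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: {ex['reasoning']} The answer is {ex['answer']}."
        )
    # The final question is left open for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical worked example with explicit intermediate steps.
examples = [{
    "question": "Tom has 3 apples and buys 2 more. How many apples does he have?",
    "reasoning": "Tom starts with 3 apples. He buys 2 more, so 3 + 2 = 5.",
    "answer": "5",
}]
prompt = build_cot_prompt(
    examples,
    "A shelf holds 4 books and 3 are added. How many books are on the shelf?",
)
print(prompt)
```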

Trained on multilingual data, the model shows translation ability

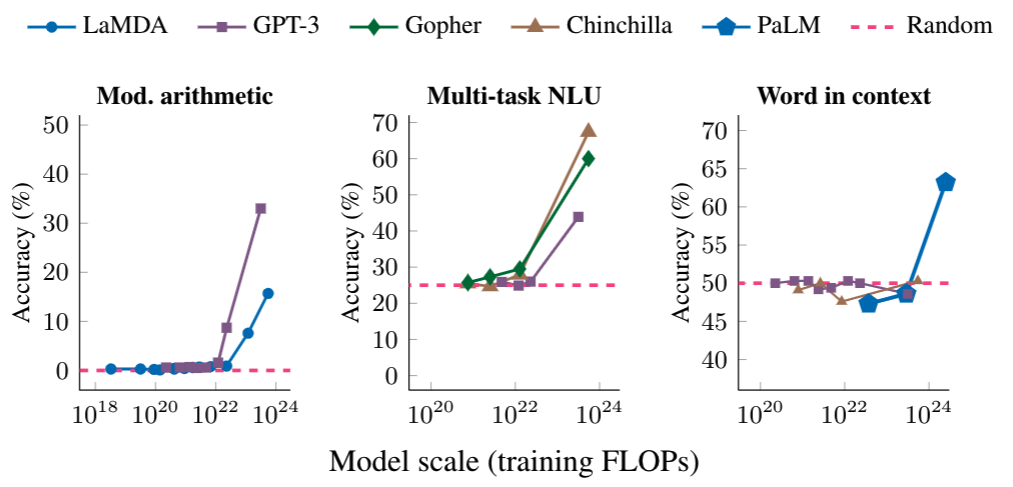

Scaling gives new capabilities

2.2. Vision

Use the transformer architecture rather than CNNs to utilize both local and non-local information
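A small sketch of why attention captures non-local information: every patch scores itself against every other patch, so two distant but similar patches can attend to each other directly, whereas a small convolution kernel only sees neighbors. This assumes single-head attention with identity query/key/value projections and made-up 2-dimensional patch features.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(patches):
    """Single-head self-attention with identity projections (assumption)."""
    dim = len(patches[0])
    outputs, all_weights = [], []
    for q in patches:
        # Scaled dot-product score between this patch and every patch.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in patches]
        weights = softmax(scores)
        # Output is the attention-weighted average of all patch features.
        out = [sum(w * v[d] for w, v in zip(weights, patches)) for d in range(dim)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Patches 0 and 3 are similar but far apart in the sequence.
patches = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(patches)
print([round(w, 3) for w in weights[0]])
```

In the printed weights for patch 0, the distant patch 3 receives as much weight as patch 0 itself and more than the adjacent patch 1.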

Understand 3D structure from one or a few 2D images

2.3. Multimodal Models

Key questions:

- How much modality-specific processing should be done before merging?

  - A few layers

- How can the representations be mixed most effectively?

  - Through a bottleneck

2.4. Generative Models

GANs, developed in 2014, have two parts:

- Generator

- Discriminator
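A sketch of how the two parts pull against each other, written as per-sample losses on the discriminator's output probabilities. It assumes the common binary cross-entropy formulation with a non-saturating generator loss; the function names are illustrative.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return -math.log(d_fake)


# d_real / d_fake are the discriminator's probabilities that a real sample
# and a generated sample, respectively, are real (hypothetical values).
print(discriminator_loss(d_real=0.9, d_fake=0.1))  # discriminator is winning
print(discriminator_loss(d_real=0.5, d_fake=0.5))  # discriminator is fooled
print(generator_loss(d_fake=0.1), generator_loss(d_fake=0.9))
```

The discriminator's loss falls as it separates real from generated samples, while the generator's loss falls as its samples get mistaken for real ones.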

Diffusion models: slowly destroy structure in a data distribution, then learn a reverse process that can restore the structure

- Can produce images, but at low quality
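A sketch of the "slowly destroy structure" half only (the forward process); the learned reverse process is not shown. It assumes a variance-preserving Gaussian step with a constant noise rate `beta`, and the two-cluster toy data is made up.

```python
import math
import random


def forward_diffusion(samples, num_steps, beta, rng):
    """Repeatedly mix each sample with Gaussian noise (variance-preserving step)."""
    keep = math.sqrt(1.0 - beta)
    noise_scale = math.sqrt(beta)
    for _ in range(num_steps):
        samples = [keep * x + noise_scale * rng.gauss(0.0, 1.0) for x in samples]
    return samples


rng = random.Random(0)
# Structured toy data (assumption): two sharp clusters at -2 and +2.
data = [-2.0] * 500 + [2.0] * 500
noised = forward_diffusion(data, num_steps=200, beta=0.05, rng=rng)

mean = sum(noised) / len(noised)
var = sum((x - mean) ** 2 for x in noised) / len(noised)
print(f"mean={mean:.2f}, variance={var:.2f}")
```

After enough steps the two clusters are gone and the samples look like unit-variance Gaussian noise; a diffusion model is trained to undo these steps one at a time.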

Contrastive Language-Image Pre-training (CLIP) and LLMs can produce high-quality results

Next challenges:

- video

- audio: larger data and a one-to-many relationship

3. ML and Computer System

3.1. Software

Distributed systems: Pathways

- AI model

- Sparse and efficient

TensorStore, particularly useful for LLMs

JAX-related libraries: Rax, T5X

3.2. Hardware

TPU and GPU

Use ML in hardware design; use an approximation level

4. Algorithm Advances

Scalable algorithms, using graphs, clustering, and optimization

Privacy and federated learning

Market algorithms and causal inference

5. Robotics

More helpful?

- Communicate more efficiently

- Understand common sense in the real world

- Scaling the number of low-level skills

Using LLMs

- Use the LLM to generate instructions (that the robot can carry out)

- Do the job in a given situation

- Value function in RL
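The notes list these pieces without saying how they combine. One possible reading, sketched below as an assumption: the LLM scores how relevant each low-level skill is to the instruction, the RL value function scores how likely the skill is to succeed in the current situation, and the robot picks the skill with the best product. All skill names and numbers are hypothetical.

```python
def choose_skill(llm_scores, value_scores):
    """Pick the skill that is both relevant (LLM) and feasible (value function)."""
    combined = {
        skill: llm_scores[skill] * value_scores[skill] for skill in llm_scores
    }
    best = max(combined, key=combined.get)
    return best, combined


# Hypothetical scores for the instruction "bring me a drink" while the
# robot is still far from the table, so picking things up is unlikely to work.
llm_scores = {"pick up the can": 0.6, "pick up the sponge": 0.1, "go to the table": 0.3}
value_scores = {"pick up the can": 0.2, "pick up the sponge": 0.2, "go to the table": 0.9}

best, combined = choose_skill(llm_scores, value_scores)
print(best)
```

Here the LLM alone would prefer "pick up the can", but the value function marks it as infeasible right now, so the combined score favors moving to the table first.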

Scalable Data Problem

- Physical skills present a significant challenge to robots

- Moravec’s Paradox: reasoning requires little computation, but perception skills require enormous computation

Simulation:

- still a gap between simulation and the real world

- collect training data

6. Natural Science

ML to act on curiosity

Complexity of biology

- brain

  - neural reconstruction of a zebrafish brain

- proteins

- genome

Quantum computing for new physics discoveries

7. TODO

Common sense in LLM?

human cortex

fruit fly brain